AI has reshaped how organizations interpret the vast, messy world of text. Emails, chat transcripts, social media posts, and sensor logs are largely unstructured, and they hold insights that traditional analytics, built for rows and columns, often miss. AI-powered text analysis promises faster, deeper understanding, which supports better customer experiences, smarter product development, and more informed risk management. By combining natural language processing (NLP), machine learning for text, and text mining, teams can turn unstructured data into structured signals and apply semantic analysis at scale. This article explores the techniques, challenges, and best practices for extracting meaningful signals from text in the AI era.

Viewed through the lens of latent semantic indexing (LSI), AI-driven text analytics surface latent topics and semantic connections across large document collections. Related framings, such as unstructured data interpretation, NLP-powered document analysis, and insights driven by semantic analysis, all describe the same goal: linking concepts like sentiment, topics, and entities. These approaches rely on topic modeling, vector representations, and contextual embeddings to reveal patterns beneath the raw words. By pairing traditional linguistic techniques with modern AI, organizations can map relationships between ideas and extract meaningful signals without relying on rigid schemas. In short, the focus shifts from simple keyword matches to a network of related concepts that informs strategy across customer experience, risk, and product development.
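LSI itself is a concrete technique: a truncated singular value decomposition (SVD) of a term-by-document matrix. The sketch below is a minimal pure-Python illustration, not a production implementation. It assumes raw term counts (LSI is usually run on TF-IDF weights), a toy four-document corpus, and power iteration on the document Gram matrix in place of a library SVD routine; all function names are hypothetical. It shows how two texts that share no words can still land close together in the latent space because a third text links their vocabulary.

```python
import math
import random
import re
from collections import Counter


def tokenize(text):
    # Lowercase ASCII word tokens; deliberately simple for illustration.
    return re.findall(r"[a-z]+", text.lower())


def doc_gram_matrix(docs):
    # G = A^T A, where A is the term-by-document count matrix.
    # Raw counts keep the sketch short; real LSI usually uses TF-IDF weights.
    counts = [Counter(tokenize(d)) for d in docs]
    return [[sum(ci[t] * cj[t] for t in ci) for cj in counts] for ci in counts]


def top_eigenpairs(m, k, iters=300, seed=0):
    # Power iteration with deflation; assumes m is symmetric positive semidefinite.
    rng = random.Random(seed)
    m = [row[:] for row in m]
    n = len(m)
    pairs = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(n)]
        for _ in range(iters):
            w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue estimate for the unit vector v.
        lam = sum(v[i] * sum(m[i][j] * v[j] for j in range(n)) for i in range(n))
        pairs.append((lam, v))
        # Deflate: subtract the found component so the next pass finds the next one.
        for i in range(n):
            for j in range(n):
                m[i][j] -= lam * v[i] * v[j]
    return pairs


def lsi_doc_vectors(docs, k=2):
    # Singular values of A are square roots of the eigenvalues of A^T A,
    # so document j's k-dimensional coordinates are sigma_i * v_i[j].
    pairs = top_eigenpairs(doc_gram_matrix(docs), k)
    return [[math.sqrt(max(lam, 0.0)) * v[j] for lam, v in pairs]
            for j in range(len(docs))]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def raw_cosine(a, b):
    # Cosine on raw term counts: zero whenever two texts share no words.
    ca, cb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(ca[t] * cb[t] for t in ca)
    norm = math.sqrt(sum(x * x for x in ca.values()) * sum(x * x for x in cb.values()))
    return dot / norm if norm else 0.0


docs = ["car engine", "car automobile engine", "automobile", "banana fruit"]
vecs = lsi_doc_vectors(docs, k=2)
print(raw_cosine(docs[0], docs[2]))  # 0.0 (no shared words)
print(cosine(vecs[0], vecs[2]))      # close to 1: linked through docs[1]
print(cosine(vecs[0], vecs[3]))      # close to 0: unrelated topic
```

In a real project, a library routine such as scikit-learn's `TruncatedSVD` would typically replace the hand-rolled eigen-solver; the toy version only makes the "latent connection" idea visible.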

What is Text Analysis in the Age of AI, and how does it leverage unstructured data and natural language processing to extract insights?

Text Analysis in the Age of AI uses natural language processing and machine learning for text to transform unstructured data (emails, chat transcripts, social posts) into structured signals. After preprocessing and tokenization, text is converted into numerical representations such as TF-IDF vectors or embeddings, and organizations run sentiment analysis, topic modeling, named entity recognition, and semantic analysis on top of them at scale. The result is faster, deeper insight that can still be governed and audited.
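The preprocessing and tokenization steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: an ASCII-only regular-expression tokenizer, a small hypothetical stopword list, and a bag-of-words count standing in for the "structured signal". Production systems typically use a library tokenizer, or the model's own subword tokenizer when embeddings are involved; the function names here are illustrative, not from any particular library.

```python
import re
import unicodedata
from collections import Counter

# Hypothetical, deliberately tiny stopword list; real projects use a curated one.
STOPWORDS = {"a", "an", "and", "the", "is", "was", "to", "of", "it", "i", "my"}

URL_RE = re.compile(r"https?://\S+")
# ASCII-only word tokens, allowing one internal apostrophe (e.g. "don't").
TOKEN_RE = re.compile(r"[a-z0-9]+(?:'[a-z]+)?")


def clean(text):
    # Unicode-normalize (NFKC folds e.g. full-width letters), strip URLs, lowercase.
    text = unicodedata.normalize("NFKC", text)
    text = URL_RE.sub(" ", text)
    return text.lower()


def tokenize(text):
    return TOKEN_RE.findall(clean(text))


def to_bag_of_words(text):
    # Turn free text into a structured {token: count} signal, minus stopwords.
    return Counter(tok for tok in tokenize(text) if tok not in STOPWORDS)


ticket = "The refund was LATE, and the refund form is broken: https://example.com/help"
print(to_bag_of_words(ticket))
```

Counts like these can feed TF-IDF weighting or simple dashboards directly; when transformer embeddings are used instead, the cleaning step usually stays while tokenization is delegated to the model.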

How do semantic analysis and transformer-based models in AI-era text analysis improve tasks on unstructured data, such as sentiment detection, topic modeling, and entity extraction?

Semantic analysis paired with transformer-based models (for example, BERT, RoBERTa, and GPT-family models) captures context-dependent meaning in unstructured data, such as recognizing that "not bad" is mildly positive rather than negative. This improves NLP tasks like sentiment analysis, topic modeling, and named entity recognition. By combining NLP with text mining and contextual embeddings, teams can gain more accurate insights that align better with business goals, provided that interpretability and governance practices stay in place.

| Theme |

Key Points |

Impact / Why It Matters |

| Landscape of Unstructured Data in the AI Era |

- Enterprises generate more text than ever from emails, chat transcripts, social posts, reviews, support tickets, and system logs.

- Much of this data is unstructured and lacks a fixed schema, making traditional relational databases less effective.

- AI-powered text analysis enables personalization, trend detection, risk management, compliance, and competitive intelligence.

|

- Unlocks deeper customer insights and experiences.

- Supports proactive risk management and strategic decision‑making.

- Enables informed product development and competitive intelligence.

|

| Core Techniques and Workflows |

- Data collection from diverse sources; preprocessing steps such as cleaning, tokenization, and normalization.

- Representations include bag-of-words, TF‑IDF, and contextual embeddings from transformer models (e.g., BERT, RoBERTa, GPT-family).

- Tasks cover sentiment analysis, named entity recognition (NER), topic modeling, clustering, semantic analysis, summarization, and translation.

- Practical approach often combines traditional NLP with AI tools; e.g., baseline TF‑IDF for topics plus transformer refinements for classification.

|

- Provides a scalable, interpretable pipeline for extracting actionable insights from text.

- Maintains governance and explainability while leveraging AI gains.

|

| The Role of AI and Large Language Models |

- LLMs accelerate understanding, enable zero-shot or few-shot learning, and reduce labeling needs.

- Requires careful model selection, prompt design, and ongoing monitoring to minimize bias and errors.

- A robust approach blends traditional NLP with LLMs: preprocessing, precise evaluation, and governance.

|

- Faster, more flexible text analysis with governance to ensure fairness and compliance.

|

| Applications Across Industries |

- Customer support: analyze chat transcripts and emails to identify issues, churn risk, and proactive outreach.

- Product development: extract insights from reviews and surveys to guide features and UX.

- Finance and regulatory: monitor communications for risk signals and compliance breaches.

- Healthcare and life sciences: extract structured insights from clinical notes, papers, and patient feedback; surface adverse events.

- Marketing and brand: track sentiment trends and topic evolution to inform campaigns.

|

- Enables informed decision-making and faster, more effective strategies across sectors.

|

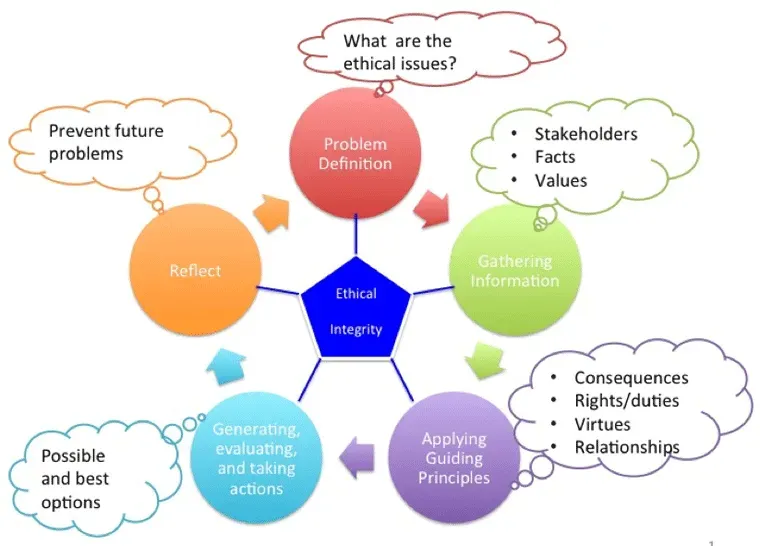

| Challenges, Ethics, and Governance |

- Data quality, privacy concerns, and bias in training data.

- Interpretability: need dashboards, model cards, and human‑in‑the‑loop validation.

- Evaluation metrics must align with the task (e.g., precision/recall for classification; ROUGE/BLEU for summaries; human judgments for nuanced semantics).

|

- Builds trust, helps ensure compliance, and supports responsible deployment.

|

| Best Practices for Effective Text Analysis |

- Define clear objectives and success criteria before starting.

- Establish data governance and privacy-preserving workflows.

- Start with a strong baseline using conventional NLP before moving to advanced AI models.

- Use contextual representations, favoring transformer-based embeddings when semantic understanding is essential.

- Evaluate with task-appropriate metrics (accuracy, F1, ROUGE, BLEU, topic coherence, etc.).

- Promote interpretability with explanations around model outputs.

- Iterate and monitor performance, drift, and fairness over time.

- Plan for governance and ethics: document provenance, limitations, and remediation steps.

|

- Increases reliability, governance, and overall impact of text analysis initiatives.

|

| The Road Ahead: Future Trends in Text Analysis |

- Retrieval-augmented generation and multimodal text analysis are expanding capabilities.

- Real-time or streaming text analysis enables proactive responses and dynamic decisions.

- Zero-shot or few-shot deployments tackle niche tasks with minimal labeling.

- Semantic analysis will deepen integration with structured data, linking textual signals to KPIs.

|

- Expect greater speed, scope, and integration across business metrics.

|

| Conclusion (Takeaways) |

- Text Analysis in the Age of AI enables turning unstructured observations into strategic actions.

- Combines NLP, machine learning for text, text mining, and semantic analysis with governance and ethics.

- Yields improved decision‑making, faster innovation, and better customer experiences across industries.

|

- Sets the foundation for ongoing adoption and competitive advantage via insights from unstructured data.

|
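The "TF-IDF baseline" from the Core Techniques and Workflows row can be sketched in a few lines. This assumes a regex tokenizer, term frequency normalized by document length, and the smoothed inverse document frequency idf(t) = ln((1 + N) / (1 + df(t))) + 1; other weighting variants are common, and libraries such as scikit-learn's `TfidfVectorizer` add options like L2 normalization. The function names and the three example tickets are illustrative only.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase ASCII word tokens (illustrative tokenizer).
    return re.findall(r"[a-z]+", text.lower())


def tfidf(docs):
    # One {term: weight} dict per document.
    # tf = count / document length; idf = ln((1 + N) / (1 + df)) + 1 (smoothed).
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    weights = []
    for toks in tokenized:
        counts = Counter(toks)
        total = len(toks)
        weights.append({
            term: (count / total) * (math.log((1 + n_docs) / (1 + df[term])) + 1)
            for term, count in counts.items()
        })
    return weights


def top_terms(weights, k=3):
    # Highest-weighted terms first; ties broken alphabetically for stable output.
    ranked = sorted(weights.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:k]]


tickets = [
    "refund delayed refund request",
    "login error after update",
    "refund request approved",
]
weights = tfidf(tickets)
print(top_terms(weights[0], k=2))  # ['refund', 'delayed']
```

Terms that are frequent in one ticket but rare across the collection rise to the top, which is what makes TF-IDF an interpretable starting point before any transformer-based refinement.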
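For the classification-style tasks named in the Challenges, Ethics, and Governance and Best Practices rows, precision, recall, and F1 come from counts of true positives, false positives, and false negatives. The minimal sketch below uses hand-made toy sentiment labels (illustrative, not real results) and hypothetical function names; macro-F1 averages per-class F1 so that a small class counts as much as a large one. In practice, scikit-learn's `precision_recall_fscore_support` computes the same metrics.

```python
def precision_recall_f1(y_true, y_pred, positive):
    # Count outcomes for one class, treating it as the "positive" label.
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(y_true, y_pred):
    # Unweighted mean of per-class F1 over every label seen in either list.
    labels = sorted(set(y_true) | set(y_pred))
    return sum(precision_recall_f1(y_true, y_pred, lab)[2] for lab in labels) / len(labels)


# Toy sentiment labels, made up purely for illustration.
y_true = ["neg", "pos", "pos", "neg", "pos", "neu"]
y_pred = ["neg", "pos", "neg", "pos", "pos", "neu"]
print(precision_recall_f1(y_true, y_pred, "pos"))  # each value is about 0.667
print(round(macro_f1(y_true, y_pred), 3))          # 0.722
```

Reporting per-class scores alongside macro-F1 makes it easier to spot a model that looks accurate overall but fails on a rare class, such as complaints that signal churn.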
